An Application Programming Interface (API) is a general way of describing a set of functions or methods that allow developers to interface with the application, service, or technology in question. In the programming world, APIs and their documentation are entrenched in day-to-day life and are a part of every programmer’s workflow.

APIs can take an immeasurable number of forms, but one idea is constant across all of them: they are designed to make a technology that has been developed accessible to others. They give other developers a consistent way of interfacing with that technology, and they are usually well documented with examples and technical manuals. (If someone goes to the trouble of developing an API, it’s most likely because they want someone else to have access to it, so they usually explain how that access works.)

Here are some examples of the APIs I use in my daily workflow:

So what does it mean to consume an API?

Typically, when a developer is working with an API, they’re working with a set of tools or a programming language that the API is compatible with. As I mentioned before, the number of combinations of APIs and consumers of APIs is immeasurable: not only are there many, many APIs already out there, but developers are making new ones every day.

For this example, I’ll go with something I’m familiar with and explain the consumption of one of the Microsoft.NET API statements I use frequently.

Microsoft .NET is a framework that at its core has a component called the Common Language Runtime (CLR). Any language can target it, as long as a compiler translates that language’s syntax into Microsoft Intermediate Language (MSIL), which the CLR then runs. For this example, I’ll be using the language Visual Basic.NET.

Programming languages are made up of various components: keywords, statements, functions, API calls, declarations, variables, and many others. In the following code example, I’ll separate out the language from the API to clearly show what I mean by Application Programming Interface.

In Microsoft’s development of the .NET Framework, they did a lot of lower-level groundwork for developers so that they didn’t have to reinvent the wheel to accomplish common tasks. This is really the benefit of an API: they spent the time and research on developing the internal specifics of the framework and its goals so that we could simply arrange those components in a way we find useful to ourselves.

Take, for example, the need to determine the name of the computer an application is running on. If I have a programming language that’s running on top of a runtime designed to execute that language, then to find out the name of the computer I’m running on I would need to leave the bounds of my program and venture into the operating system’s information. Microsoft has given .NET developers a simple way to do this in their programs by means of an API that exposes the machine’s name on the current operating system.

In order to access the computer’s name within the .NET Framework, I would simply use the following code:

Dim strComputerName As String = My.Computer.Name

“My.Computer.Name” is the API Microsoft has made available in order for developers to interface with the operating system to determine the name given to the computer. It is well documented (as are all their APIs) and the documentation contains everything necessary for the knowledgeable developer to use them.
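The same idea shows up on most platforms. As a rough comparison (this is not part of the .NET example), here is a minimal Python sketch that asks the operating system for the machine name through the standard library. The function name `computer_name` is my own choice for illustration.

```python
import socket


def computer_name() -> str:
    """Return the host name the operating system reports for this machine."""
    # socket.gethostname() is the standard-library call that reaches out of
    # our program and into the operating system to fetch the name.
    return socket.gethostname()


if __name__ == "__main__":
    print(computer_name())
```

In both languages the calling code never touches operating system internals directly; it only arranges what the API already provides.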

Everything on your computer screen uses an API. At the most basic level, programs interface with the operating system through the OS’s API just to run. In fact, even the OS interfaces with a sort of hardware API through the hardware abstraction layer so it can run. And these days, Web 2.0 sites are extending the idea of APIs out of our computers and across the internet by making entire websites available through the same type of well-documented API.

There are two very common API technologies used on the internet that I’ll be working with in this discussion: RSS feeds and XML Web Services. Both technologies are based on XML, which became important as the number of differing operating systems and information sources grew rapidly and developers needed an agreed-upon standard way of communicating with each other.

We’ve all seen examples of RSS feeds, but here is an example of a Web Service. I created this sample service to add two numbers together and return the result in XML. The resulting function AddNumbers can be consumed by anyone on the internet, and my application’s logic (simple as it may be in this case) can easily be integrated with the development efforts put forth by others.
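To give a feel for what consuming such a service involves, here is a minimal Python sketch. It is only an illustration under stated assumptions: the endpoint URL, the parameter names `a` and `b`, and the shape of the XML response (a single element whose text is the sum) are all hypothetical, since the service’s actual address and response format are not shown in this post. A canned response stands in for the network call.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# Hypothetical endpoint; the real service's address is not given in this post.
SERVICE_URL = "http://example.com/AddNumbers"


def build_request_url(a: int, b: int) -> str:
    """Build the GET URL for the (assumed) AddNumbers operation."""
    # The parameter names "a" and "b" are assumptions for illustration.
    return SERVICE_URL + "?" + urlencode({"a": a, "b": b})


def parse_sum(response_xml: str) -> int:
    """Pull the integer result out of the XML the service sends back."""
    root = ET.fromstring(response_xml)
    # Assumed shape: a single root element whose text is the sum.
    return int(root.text.strip())


# A canned response stands in for the network call so the sketch runs offline.
SAMPLE_RESPONSE = "<int>5</int>"

if __name__ == "__main__":
    print(build_request_url(2, 3))
    print(parse_sum(SAMPLE_RESPONSE))
```

The consumer only needs to know how to form the request and how to read the XML that comes back; the addition itself stays on the service’s side.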

There are many services out there that offer to ease the development of integrated APIs. One such tool, Yahoo Pipes, allows users to merge many types of data sources and perform relatively complex operations on those sources to produce an output tailored to each individual application.

As an example of the usefulness of these diverse services and the ease with which you can integrate them, I have created a pipe that uses my sample XML Web Service (the one that adds two numbers) to mash up information from an airline travel provider and give me a custom set of data. In this example scenario, I have a service that tells me the price it can get for an airline ticket, provided some input. Now, I don’t actually have a service that does this, but for the purposes of my example, imagine that my service which adds two numbers would do this for me. So I’ll provide it with the airfare listed in the deals RSS feed, and it’ll report back the price at which it can offer me that fare.

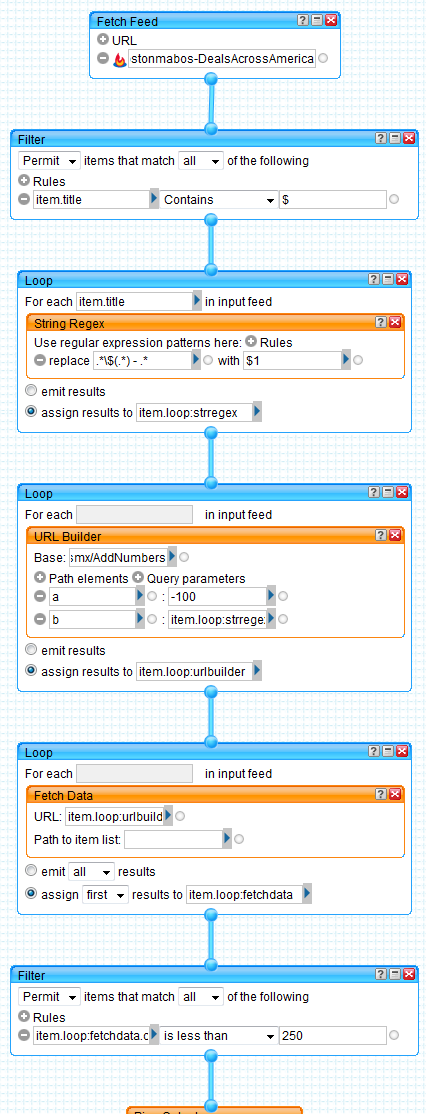

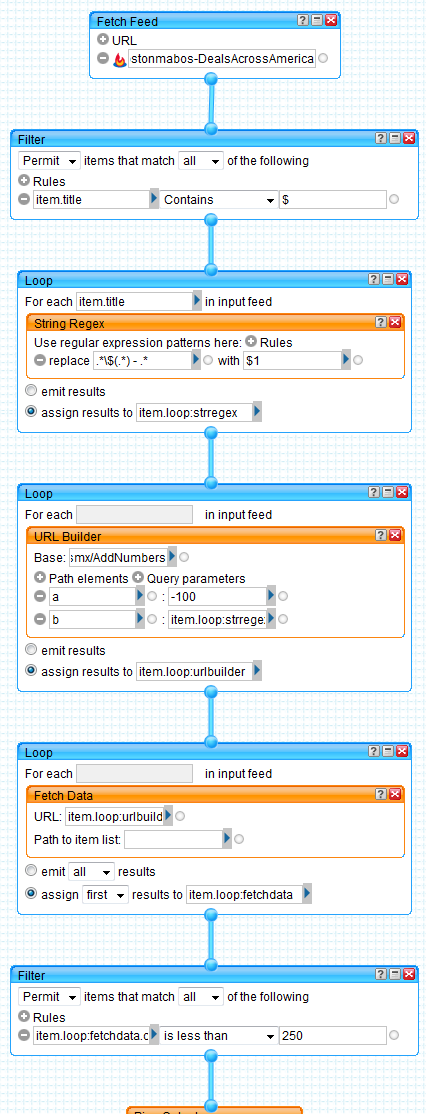

As you can see here in my pipe, I begin by selecting the feed:

Boston Deals Across America

I then filter out all non-sale items, since I’m only interested in items with pricing information. After that, I use a regular expression to extract just the pricing information from the title.
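Outside of Pipes, the same filter-and-extract step can be sketched in a few lines of Python. The feed content below is made up (the titles are invented to show one item with a price and one without), and the regular expression is my own guess at a reasonable pattern, not the one used in the pipe.

```python
import re
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Made-up feed content; the titles are invented for illustration.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Sample Deals</title>
    <item><title>Boston to Chicago from $199</title></item>
    <item><title>New routes announced for spring</title></item>
    <item><title>Boston to Denver from $289</title></item>
  </channel>
</rss>"""

# A dollar sign followed by digits, with optional cents.
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{2})?)")


def priced_items(rss_xml: str) -> List[Tuple[str, float]]:
    """Return (title, price) pairs for items whose title contains a price."""
    root = ET.fromstring(rss_xml)
    results = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        match = PRICE_PATTERN.search(title)
        if match:  # items without a price are filtered out
            results.append((title, float(match.group(1))))
    return results


if __name__ == "__main__":
    for title, price in priced_items(SAMPLE_RSS):
        print(title, price)
```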

Next, I build a request for my Pricing Web Service (in this case, the Web Service that adds two numbers) and then submit that request to the service:

Add Numbers Web Service

The Web Service returns a price (which would be the price it could offer me the fare for if this were real), and I then filter on that price to find deals that are less than $250.
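The last two steps of the pipe, calling the service and filtering on its answer, can be sketched the same way. Everything here is hypothetical: `quote_price` is a local stand-in for the Web Service call (it just adds a flat 10.0 to the fare, in the spirit of a service that only adds two numbers), and the deal names and fares are invented.

```python
def quote_price(fare: float) -> float:
    """Stand-in for the pricing Web Service call.

    Hypothetical: it adds a flat 10.0 to the fare, mirroring the fact that
    the sample service only adds two numbers.
    """
    return fare + 10.0


def deals_under(fares, limit=250.0):
    """Quote each (title, fare) pair and keep quotes strictly below limit."""
    kept = []
    for title, fare in fares:
        quoted = quote_price(fare)
        if quoted < limit:
            kept.append((title, quoted))
    return kept


if __name__ == "__main__":
    # Invented sample data.
    fares = [("Deal A", 199.0), ("Deal B", 245.0), ("Deal C", 289.0)]
    print(deals_under(fares))
```

Swapping the stand-in for a real HTTP call would not change the filtering logic, which is much of the appeal of composing services this way.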

As you can see, I integrated two distinct services that could be used for very different things to reach a specific goal that helps me: finding flights to or from Atlanta with special pricing that ends up being less than $250.

So, with APIs available for just about anything and everything, we now have within our reach the possibility of integrating these applications with each other in the form of mashups or other custom development efforts that tie two or more of these technologies together.

Some of the more useful ones I’ve found are Microsoft Virtual Earth integrating with Weather.com to make an interactive weather map that lets you zoom in far enough to view the radar information for your own street if you want. Weather Underground has done something similar with Google Maps, showing a real-time map of currently reported temperatures and wind speeds.

Another very useful mashup I’ve used is the Texas Department of Transportation’s integration with Google Maps to show a near-real-time display of all reported traffic-related incidents in the DFW Metroplex.

From a design perspective, this is an extremely useful trend. In fact, in one of my recent contracts, I was tasked with displaying the locations of earthquakes for which I had processed damage estimates, integrating with XML APIs provided by the University of Alaska, for the Trans-Alaska Pipeline.

Instead of having to graphically render the location of each reported earthquake through my own graphics APIs, I was able to use Google Maps’ URL-based API for displaying a map at a particular location and in a particular view. This allowed me to drastically cut the development time for this functional requirement of the site, saving me the effort of recreating what Google had already done (and done better than I could have).
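A URL-based map API comes down to building a query string. The Python sketch below is not the code from that project. It assumes the present-day Google Maps URLs format (`api=1`, `map_action=map`, `center`, `zoom`, `basemap`) as I understand it, which may differ from the URL scheme available at the time. The function name and the coordinates are arbitrary examples.

```python
from urllib.parse import urlencode


def map_url(lat: float, lng: float, zoom: int = 8, basemap: str = "terrain") -> str:
    """Build a link that opens a map centered on the given coordinates."""
    params = {
        "api": 1,                   # assumed: required marker of the Maps URLs format
        "map_action": "map",        # show a plain map (no search or directions)
        "center": f"{lat},{lng}",   # urlencode escapes the comma as %2C
        "zoom": zoom,
        "basemap": basemap,
    }
    return "https://www.google.com/maps/@?" + urlencode(params)


if __name__ == "__main__":
    # Arbitrary example coordinates, not real earthquake data.
    print(map_url(61.2, -149.9))
```

The rendering, tiles, and zoom controls all stay on the provider’s side; the site only has to produce a link per earthquake.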

APIs are everywhere and essential. With their extension to the internet in the form of consumable RSS feeds and interactive XML web services, the possibilities for design and development are limitless. We can reuse complicated processes with relative ease and combine them in many new and exciting ways, spending more time on our site’s design and less time reinventing the work of others.